Between 2020 and 2022, in collaboration with the Province of Zuid-Holland and WorldStartup, we developed an artificial intelligence system to detect and analyze misinformation about 5G technology circulating on social media. The objective was to equip public institutions with a data-driven tool capable of identifying unreliable information and mapping its spread. The prototype's ultimate purpose was to alert decision-makers when new waves of misinformation began to form.

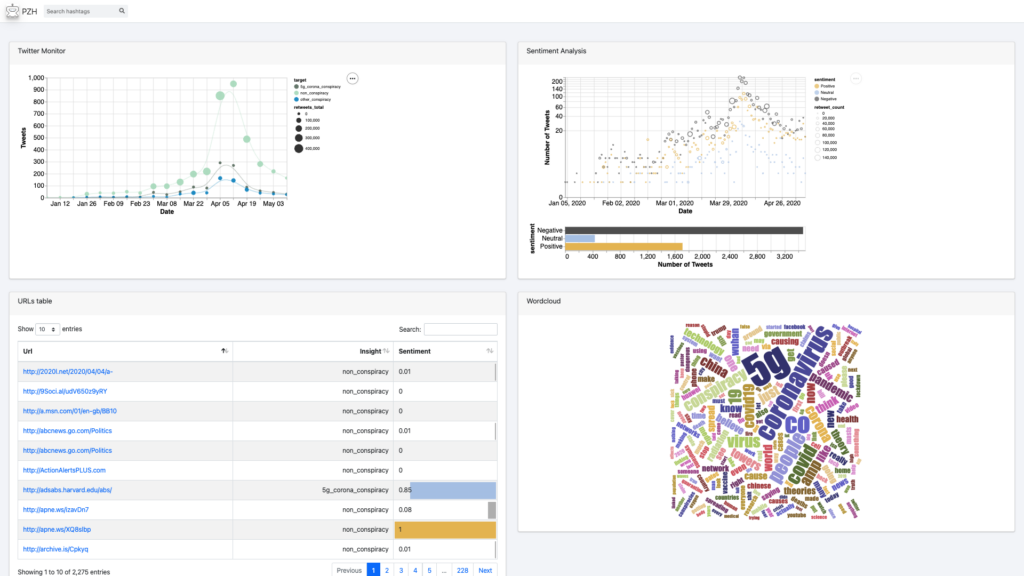

The system combined natural language processing and graph network analysis in a single framework. A proprietary modified BERT model evaluated the semantic similarity between social media content and verified sources, assigning each post a reliability probability instead of a binary “true/false” label. This approach allowed the model to pick up subtle differences in tone and context and to capture how misinformation evolves, rather than only detecting it after the fact. We also built a simple dashboard to monitor the spread of misinformation on Twitter. It showed sentiment analysis, fake news flags, keyword distributions, and links to the fake news or legitimate sources mentioned in the tweets, among other things.
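To make the idea of a reliability probability more concrete, here is a minimal sketch of how a similarity score against verified sources could be turned into a probability rather than a hard label. It is not the proprietary model described above: the embedding vectors are hand-written toy values standing in for BERT outputs, and the function names, the logistic mapping, and its `steepness` and `midpoint` parameters are all assumptions made for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def reliability_probability(post_vec, verified_vecs, steepness=10.0, midpoint=0.5):
    """Map the best similarity to any verified source onto (0, 1).

    The logistic mapping and its parameters are hypothetical; a real
    system would learn this calibration from labeled data.
    """
    best = max(cosine(post_vec, v) for v in verified_vecs)
    return 1.0 / (1.0 + math.exp(-steepness * (best - midpoint)))


# Toy embeddings (assumed values, not real model outputs).
verified = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]]
close_post = [0.85, 0.15, 0.05]  # semantically near the verified sources
far_post = [0.0, 0.1, 0.95]      # semantically far from them

p_close = reliability_probability(close_post, verified)
p_far = reliability_probability(far_post, verified)
print(f"close post: {p_close:.3f}, far post: {p_far:.3f}")
```

The point of a continuous score is that posts in the middle of the range can be routed to human review instead of being forced into one of two classes.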

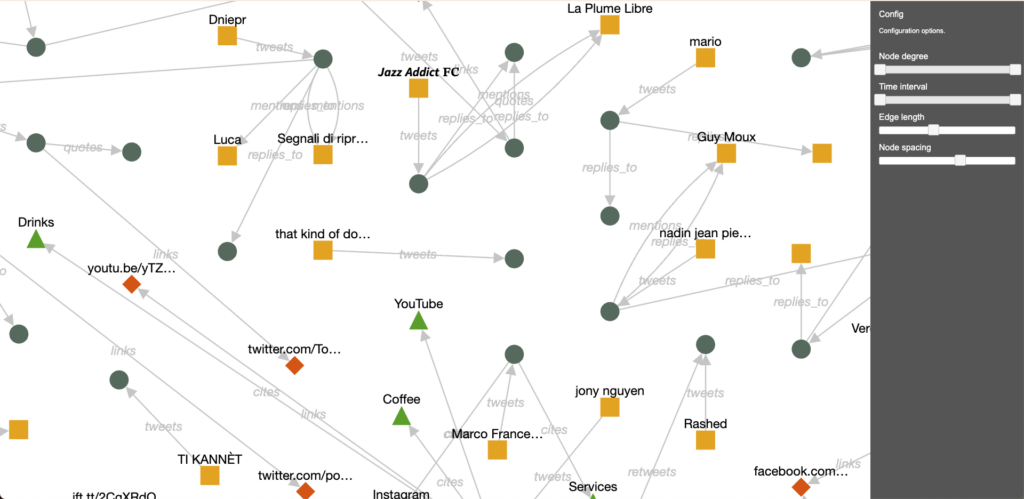

A graph-based engine mapped the online conversations themselves. Every account, link, and hashtag was represented as a node connected by interactions such as retweets, mentions, and replies. This made it possible to visualize the topology of misinformation networks and detect emerging clusters of coordinated activity.
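The graph representation described above can be sketched with plain Python dictionaries. This example is an illustration under stated assumptions, not the engine we built: the interaction records are invented, the graph is treated as undirected, and connected components are used as a much cruder stand-in for the cluster detection a production system would need.

```python
from collections import defaultdict, deque

# Hypothetical interaction records: (source node, target node, interaction type).
interactions = [
    ("@alice", "@bob", "retweet"),
    ("@bob", "#5g", "hashtag"),
    ("@carol", "@alice", "mention"),
    ("@dave", "example.org/article", "link"),
]


def build_graph(edges):
    """Build an undirected adjacency map from interaction records."""
    graph = defaultdict(set)
    for source, target, _kind in edges:
        graph[source].add(target)
        graph[target].add(source)
    return graph


def connected_components(graph):
    """Return the sets of nodes reachable from each other (breadth-first search)."""
    seen, components = set(), []
    for start in graph:
        if start in seen:
            continue
        queue, component = deque([start]), set()
        seen.add(start)
        while queue:
            node = queue.popleft()
            component.add(node)
            for neighbor in graph[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        components.append(component)
    return components


graph = build_graph(interactions)
clusters = sorted(connected_components(graph), key=len, reverse=True)
for cluster in clusters:
    print(sorted(cluster))
```

Accounts, links, and hashtags all live in the same node space here, which is what lets a single traversal surface a group of accounts gathered around a shared hashtag or URL.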

Surprisingly, the analysis revealed that fewer than 2% of the accounts were responsible for over 70% of the content spreading 5G-related conspiracy theories. These accounts formed tightly connected communities that amplified each other’s posts, while verified sources tended to remain isolated and under-connected. This structural asymmetry helped explain why fake narratives gained traction faster than factual ones.
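A concentration figure of this kind is simple to compute once each post is attributed to an account. The sketch below shows the arithmetic on synthetic data. The account names and post counts are made up for the example and do not reproduce our dataset or results, and `top_share` is a hypothetical helper name.

```python
import math
from collections import Counter


def top_share(post_authors, top_percent):
    """Fraction of all posts written by the most active `top_percent` of accounts."""
    counts = Counter(post_authors)
    n_top = max(1, math.ceil(len(counts) * top_percent / 100))
    top_total = sum(count for _account, count in counts.most_common(n_top))
    return top_total / len(post_authors)


# Synthetic data: 100 accounts, two of which post far more than the rest.
posts = ["hub_a"] * 60 + ["hub_b"] * 40 + [f"user_{i}" for i in range(98)]

share = top_share(posts, top_percent=2)
print(f"Top 2% of accounts produced {share:.1%} of posts")
```

In this toy set the two most active accounts out of 100 produce 100 of the 198 posts, so the top 2% account for roughly half of the content.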

Our prototype achieved 95% accuracy in distinguishing fake from reliable news and, more importantly, could detect emerging misinformation topics up to 48 hours before they became mainstream. The graph network component also enabled early identification of new clusters forming around unverified claims, effectively turning the system into a real-time early-warning tool for online disinformation. The model was trained and tested in four languages (English, Dutch, Italian, and Russian), demonstrating that it scales across languages and can be adapted to new topics such as vaccines, elections, or climate-related misinformation.